Eryk Salvaggio is a researcher and new media artist interested in the social and cultural impacts of artificial intelligence. His work, which is centered on creative misuse and the right to refuse, critiques the mythologies and ideologies of tech design that ignore the gaps between datasets and the world they claim to represent. A blend of hacker, policy researcher, designer and artist, he has been published in academic journals, spoken at music and film festivals, and consulted on tech policy at the national level.

Ghosts in the Archives Become Ghosts in the Machines

I’m honored to be joining the Flickr Foundation to imagine the next 100 years of Flickr, thinking critically about the relationships between datasets, history, and archives in the age of generative AI.

AI is thick with stories, but we tend to only focus on one of them. The big AI story is that, with enough data and enough computing power, we might someday build a new caretaker for the human race: a so-called “superintelligence.” While this story drives human dreams and fears—and dominates the media sphere and policy imagination—it obscures the more realistic story about AI: what it is, what it means, and how it was built.

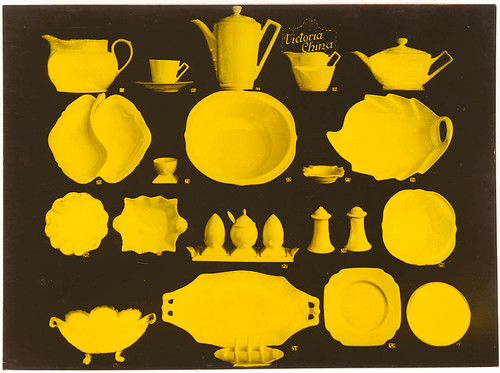

The invisible stories of AI are hidden in its training data. They are human: photographs of loved ones, favorite places, things meant to be looked at and shared. Some of them are tragic or traumatic. When we look at the output of a large language model (LLM), or the images made by a diffusion model, we’re seeing a reanimation of thousands of points of visual data: data that was generated by people like you and me, sharing experiences and art with other people over the World Wide Web. It’s the story of our heritage, archives and the vast body of human visual culture.

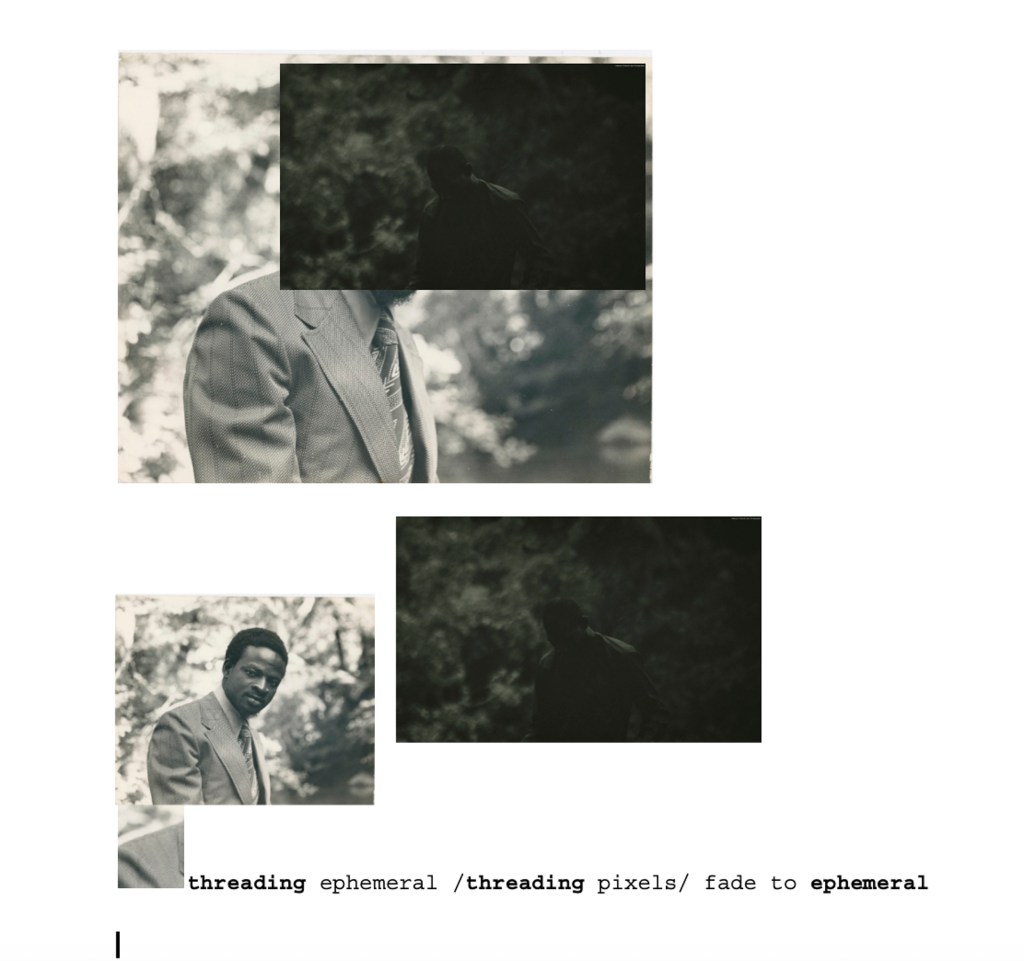

I approach generated images as a kind of seance, a reanimation of these archives and data points which serve as the techno-social debris of our past. These images are broken down — diffused — into new images by machine learning models. But what ghosts from the past move into the images these models make? What haunts the generated image from within the training data?

In “Seance of the Digital Image” I began to seek out the “ghosts” that haunt the material that machines use to make new images. In my residency with the Flickr Foundation, I’ll continue to dig into training data, particularly the Flickr Commons collection, to see the ways it shapes AI-generated images. These will not be one-to-one correlations, because that’s not how these models work.

So how do these diffusion models work? How do we make an image with AI? The answer to this question is often technical: a system of diffusion, in which training images are broken down into noise and reassembled. But this answer ignores the cultural component of the generated image. Generative AI is a product of training datasets scraped from the web, and entangled in these datasets are vast troves of cultural heritage data and photographic archives. When training data-driven AI tools, we are diffusing data, but we are also diffusing visual culture.

Eryk Salvaggio: Flowers Blooming Backward Into Noise (2023) from ARRG! on Vimeo.

In my research, I have developed a methodology for “reading” AI-generated images as the products of these datasets, as a way of interrogating the biases that underwrite them. Since then, I have taken an interest in this way of reading for understanding the lineage, or genealogy, of generated images: what stew do these images make with our archives? Where does a model learn the concept of what represents a person, or a tree, or even an archive? Again, we know the technical answer. But what is the cultural answer to this question?

By looking at generated images and the prompts used to make them, we’ll build a way to map their lineages: the history that shapes and defines key concepts and words for image models. My hope is that this endeavor shows us new ways of looking at generated images and surfaces new stories about what such images mean.

As the tech industry continues building new infrastructures on this training data, our window of opportunity for deciding what we give away to these machines is closing, and understanding what is in those datasets is difficult, if not impossible. Much of the training data is proprietary, or has been taken offline. While we cannot map generated images to their true training data, massive online archives like Flickr give us insight into what that data might be. Through my work with the Flickr Foundation, I’ll look at the images from institutions and users to think about what these images mean in this generated era.

In this sense, I will interrogate what haunts a generated image, but also what haunts the original archives: what stories do we tell, and which do we lose? I hope to reverse the generated image in a meaningful way: to break the resulting image apart, tackling correlations between the datasets that train these models, the archives that built those datasets, and the images that emerge from those entanglements.